Original date published: April 7, 2024

Foundation Models for Robotics Series Part 5: High-Level Planning with Foundation Models and In-Context Learning for Decision-Making

In this part of the series, we consider approaches for using LLMs for high-level planning in robotics.

LLMs can be used for high-level task planning in long-horizon robot tasks. In robot planning, a plan can be broken down into two levels: a high-level plan and a low-level plan. The high-level plan defines the sequence of trajectory steps (for example, subgoals or waypoints) the robot needs to go through to reach its goal state, and it is usually abstracted away from the robot's hardware and constraints. The low-level plan, on the other hand, defines the motor controls required to execute the steps defined by the high-level planner. In robot navigation, these two levels are also referred to as the global planner and the local planner.

The use of LLMs as high-level planners has become popular in robotics research because of their generalizability and reasoning capabilities. Figure AI [2], a new robotics startup building general-purpose humanoid robots, has partnered with OpenAI to implement its robots' intelligence capabilities. While the exact implementation details of this partnership are not public, in a recent interview Figure AI's founder and CEO, Brett Adcock, shared that they use OpenAI foundation models for high-level planning, while using other control learning algorithms, such as reinforcement learning, for low-level planning to enable robot manipulation [3]. In this part of the series, we explore existing work on using LLMs for high-level planning.
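The two-level split described above can be sketched as a planner that emits hardware-agnostic subgoals and a set of controllers that turn each subgoal into (here, simulated) robot actions. This is a minimal illustration under assumptions: `high_level_plan` is a hypothetical stub standing in for an LLM call, and the controllers only log what a real low-level layer would do.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subgoal:
    """One step of a high-level plan: symbolic and hardware-agnostic."""
    action: str
    target: str


def high_level_plan(instruction: str) -> List[Subgoal]:
    """Hypothetical stand-in for an LLM planner.

    A real system would prompt a foundation model here; this stub returns a
    fixed plan so the example runs offline."""
    if instruction == "bring me the cup":
        return [
            Subgoal("navigate", "kitchen"),
            Subgoal("pick", "cup"),
            Subgoal("navigate", "user"),
            Subgoal("place", "cup"),
        ]
    return []


def make_low_level_controllers(log: List[str]) -> Dict[str, Callable[[str], bool]]:
    """Hypothetical low-level layer: one controller per action type.

    Real controllers would own the hardware-specific details (motor commands,
    local planning, grasping); here they only record what they would do."""
    def navigate(target: str) -> bool:
        log.append(f"drive base to {target}")
        return True

    def pick(target: str) -> bool:
        log.append(f"grasp {target}")
        return True

    def place(target: str) -> bool:
        log.append(f"release {target}")
        return True

    return {"navigate": navigate, "pick": pick, "place": place}


def execute(instruction: str) -> List[str]:
    """Run each high-level subgoal through its matching low-level controller."""
    log: List[str] = []
    controllers = make_low_level_controllers(log)
    for step in high_level_plan(instruction):
        succeeded = controllers[step.action](step.target)
        if not succeeded:
            break  # a real system might ask the high-level planner to replan here
    return log


print(execute("bring me the cup"))
```

Keeping the subgoal format symbolic is what lets the same planner be reused across robots: only the controller table would change.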

LLMs can be used to translate language instructions into task specifications. The SayCan architecture uses an LLM for high-level task planning and then uses a learned value function to ground the proposed steps in the environment, so that the robot selects skills it can actually execute in its current state [4]. Temporal logic is useful for imposing temporal specifications on robotic systems. NL2TL proposes transforming natural language instructions into temporal logic using a sequence-to-sequence transformer model [5]. The authors achieve this by fine-tuning a T5 model on a dataset of 28K natural-language/temporal-logic pairs.
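SayCan's grounding step can be sketched as a product of two scores per candidate skill: how useful the LLM thinks the skill is for the instruction, and how likely the value function thinks the skill is to succeed from the current state. This is a minimal sketch assuming both scores are already computed; `saycan_step`, the skill names, and all numbers are made up for illustration and are not taken from the SayCan code.

```python
from typing import Dict


def saycan_step(llm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Pick the skill that is both useful (LLM) and feasible (value function).

    llm_scores: hypothetical LLM probability that each skill is a useful next
        step for the instruction.
    affordances: hypothetical value-function estimate that each skill would
        succeed from the current state (missing skills count as infeasible).
    """
    combined = {
        skill: score * affordances.get(skill, 0.0)
        for skill, score in llm_scores.items()
    }
    return max(combined, key=combined.get)


# Made-up numbers for the instruction "I spilled my drink, can you help?"
llm_scores = {"pick up the sponge": 0.5, "find a sponge": 0.3, "go to the table": 0.2}
# No sponge is in view yet, so the value function rates picking it up as unlikely to succeed.
affordances = {"pick up the sponge": 0.1, "find a sponge": 0.9, "go to the table": 0.8}

print("LLM only:", max(llm_scores, key=llm_scores.get))
print("SayCan:", saycan_step(llm_scores, affordances))
```

With these numbers the LLM alone prefers "pick up the sponge", but weighting by the value function selects "find a sponge" (0.3 * 0.9 = 0.27 beats 0.5 * 0.1 = 0.05 and 0.2 * 0.8 = 0.16). In SayCan, the chosen skill is appended to the plan and the selection repeats until a terminating step is chosen.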

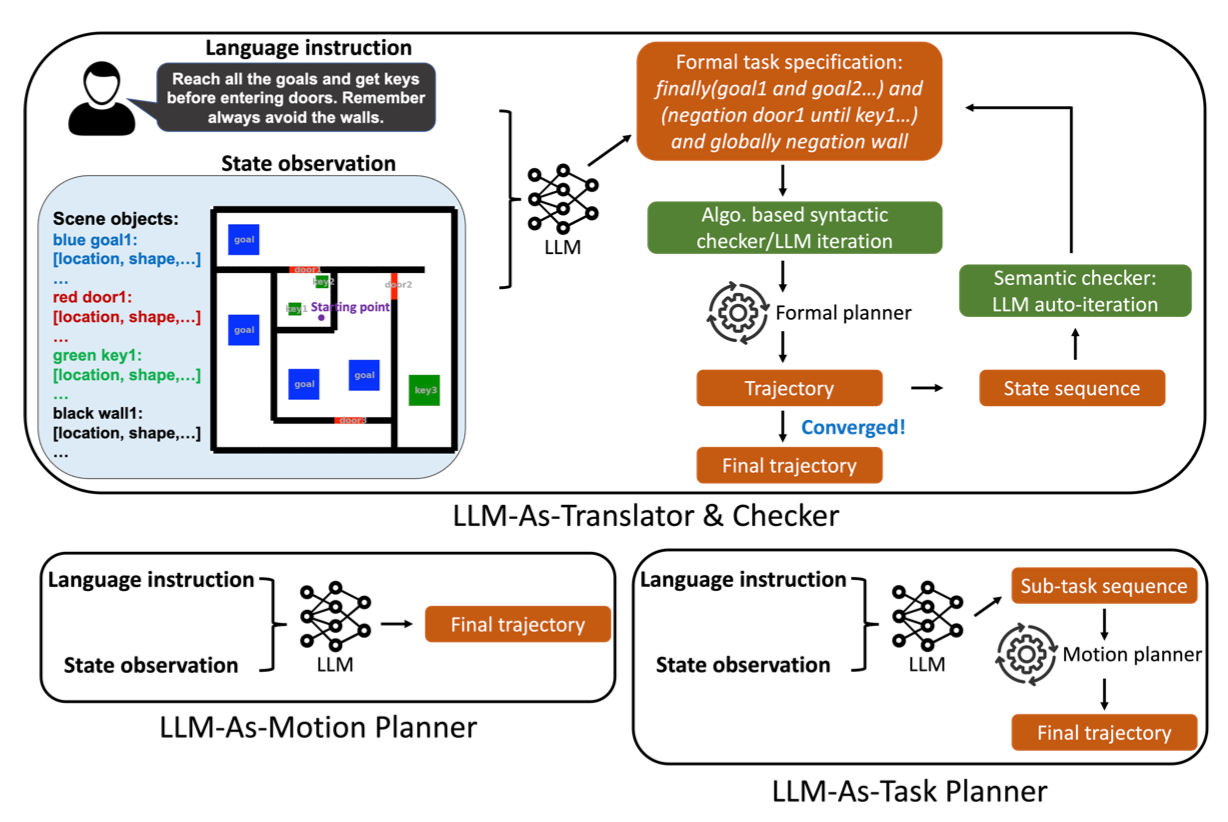

LLMs can also be used to translate natural language task descriptions into intermediate task representations, which a task and motion planning (TAMP) algorithm can then use to jointly optimize the task and motion plans. AutoTAMP uses this architecture for autoregressive task and motion planning, with LLMs acting as translators and checkers [6].
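The translate-then-check pattern can be sketched as a loop: the translator proposes a formal specification, a checker looks for errors, and any error message is fed back in the next prompt. This is a minimal sketch under assumptions: the "LLM" is a scripted stand-in, the target is a tiny LTL-like string format (AutoTAMP itself targets signal temporal logic and also checks for semantic errors), and `check_syntax`, `translate_with_checks`, and `make_scripted_llm` are hypothetical helpers that only catch simple syntactic problems.

```python
import re
from typing import Callable, List, Optional, Set

# Hypothetical operator vocabulary for a tiny LTL-like language:
# F = eventually, G = always, U = until, plus boolean connectives.
OPERATORS = {"F", "G", "U", "&", "|", "!", "(", ")"}


def check_syntax(formula: str, propositions: Set[str]) -> Optional[str]:
    """Return an error message, or None if the formula passes these basic checks."""
    depth = 0
    for ch in formula:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return "unmatched ')'"
    if depth != 0:
        return "unbalanced parentheses"
    for token in re.findall(r"[A-Za-z_]+|[&|!()]", formula):
        if token not in OPERATORS and token not in propositions:
            return f"unknown symbol '{token}'"
    return None


def translate_with_checks(
    instruction: str,
    propositions: Set[str],
    llm: Callable[[str, str], str],
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask the translator, check its output, and re-prompt with the error if it fails."""
    feedback = ""
    for _ in range(max_attempts):
        formula = llm(instruction, feedback)
        error = check_syntax(formula, propositions)
        if error is None:
            return formula
        feedback = f"Your previous output {formula!r} is invalid: {error}. Please correct it."
    return None


def make_scripted_llm(outputs: List[str], prompts_seen: List[str]) -> Callable[[str, str], str]:
    """Fake translator that replays canned outputs and records the feedback it received."""
    replies = iter(outputs)

    def llm(instruction: str, feedback: str) -> str:
        prompts_seen.append(feedback)
        return next(replies)

    return llm


prompts_seen: List[str] = []
fake_llm = make_scripted_llm(
    ["F(room_a & F(room_b) & G(!lava)",    # first attempt: missing a ')'
     "F(room_a & F(room_b)) & G(!lava)"],  # corrected after feedback
    prompts_seen,
)
result = translate_with_checks(
    "Visit room A, then room B, and never step in lava.",
    {"room_a", "room_b", "lava"},
    fake_llm,
)
print(result)
print(prompts_seen[1])
```

The checker here is deliberately cheap and rule-based; the point is the feedback loop, which lets the translator correct its own output instead of passing an invalid specification to the planner.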

Different approaches for using LLMs as task and motion planners as shown in AutoTAMP [6].

Another common approach for applying LLMs to high-level planning is code generation. Here, code-generating language models produce a sequence of sub-tasks, represented as code, for the robot to execute. Given a new command, the LLM autonomously composes API calls and generates new policy code.
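The execution side of this approach can be sketched as running the generated code in a namespace that exposes only the robot's API. This is a minimal sketch under assumptions: `make_robot_api`, its functions, and `run_policy_code` are hypothetical, and `GENERATED_CODE` is hand-written to stand in for an LLM response.

```python
from typing import Callable, Dict, List


def make_robot_api(log: List[str]) -> Dict[str, Callable[..., None]]:
    """Hypothetical robot API exposed to generated code; calls are only logged."""
    def move_to(location: str) -> None:
        log.append(f"move_to({location})")

    def pick(obj: str) -> None:
        log.append(f"pick({obj})")

    def place(obj: str, location: str) -> None:
        log.append(f"place({obj}, {location})")

    return {"move_to": move_to, "pick": pick, "place": place}


# What an LLM might return for "put the apple in the bowl" (written by hand here).
GENERATED_CODE = """
move_to("apple")
pick("apple")
move_to("bowl")
place("apple", "bowl")
"""


def run_policy_code(code: str, api: Dict[str, Callable[..., None]]) -> None:
    """Execute generated code with only the robot API in scope.

    Emptying __builtins__ limits what the code can call by accident, but it is
    NOT a security sandbox; untrusted code needs real isolation."""
    namespace: Dict[str, object] = {"__builtins__": {}}
    namespace.update(api)
    exec(code, namespace)


log: List[str] = []
run_policy_code(GENERATED_CODE, make_robot_api(log))
print(log)
```

Restricting the namespace to the API also documents, in code, exactly which primitives the LLM was told it may compose.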

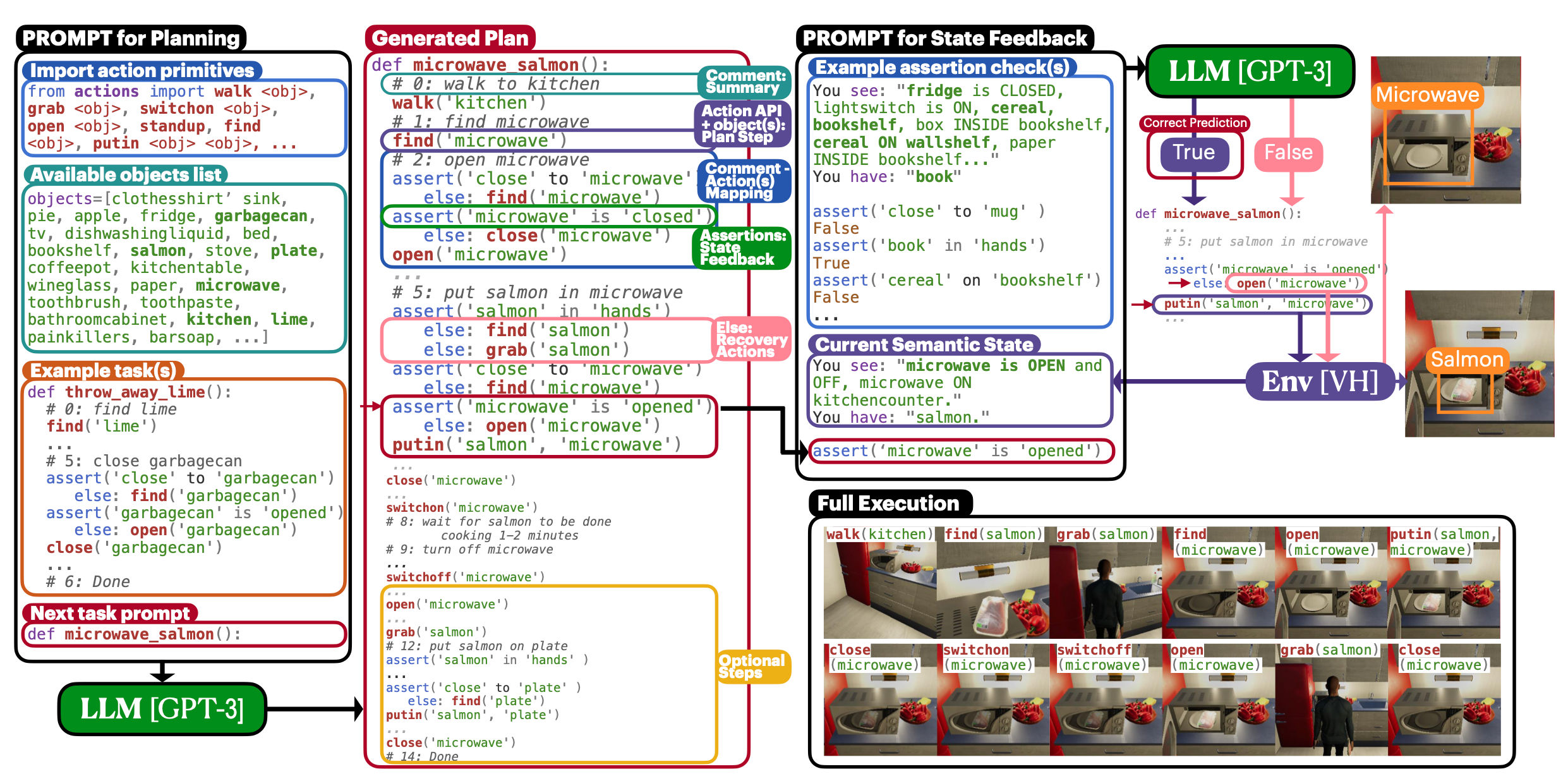

Classical planning algorithms require extensive domain knowledge and must search large spaces; LLMs can instead be used to generate the sequence of actions required to achieve a high-level task. ProgPrompt gives the LLM an initial prompt that specifies the available actions, the objects in the environment, and example programs that can be executed. This additional context allows the LLM to generate action sequences directly, without hand-engineered domain knowledge [7].
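A ProgPrompt-style prompt can be assembled from the three ingredients listed above: an action "import", an object list, and example programs, followed by an open function header for the new task. This is a sketch under assumptions: the action names, objects, and example program are invented for illustration, `build_progprompt_style_prompt` is a hypothetical helper, and the exact prompt format used in the paper may differ in its details.

```python
from typing import List


def build_progprompt_style_prompt(
    actions: List[str], objects: List[str], examples: List[str], task_name: str
) -> str:
    """Assemble a Pythonic planning prompt: action imports, object list,
    example programs, then an open function header for the LLM to complete."""
    lines = [f"from actions import {', '.join(actions)}", "", f"objects = {objects!r}", ""]
    for example in examples:
        lines.append(example.strip())
        lines.append("")
    lines.append(f"def {task_name}():")
    return "\n".join(lines)


# Hypothetical example program; the comments mark sub-task boundaries.
EXAMPLE_PROGRAM = """
def put_salmon_in_fridge():
    # 1: grab the salmon
    walk('salmon')
    grab('salmon')
    # 2: put it in the fridge
    walk('fridge')
    open('fridge')
    putin('salmon', 'fridge')
    close('fridge')
"""

prompt = build_progprompt_style_prompt(
    actions=["walk", "grab", "open", "close", "putin", "switchon"],
    objects=["salmon", "microwave", "fridge"],
    examples=[EXAMPLE_PROGRAM],
    task_name="microwave_salmon",
)
print(prompt)
```

The LLM's completion of the final `def microwave_salmon():` header is the plan; because it can only reference the listed actions and objects, the output stays situated in the current environment.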

ProgPrompt prompting architecture for generating sequences of actions as code [7].

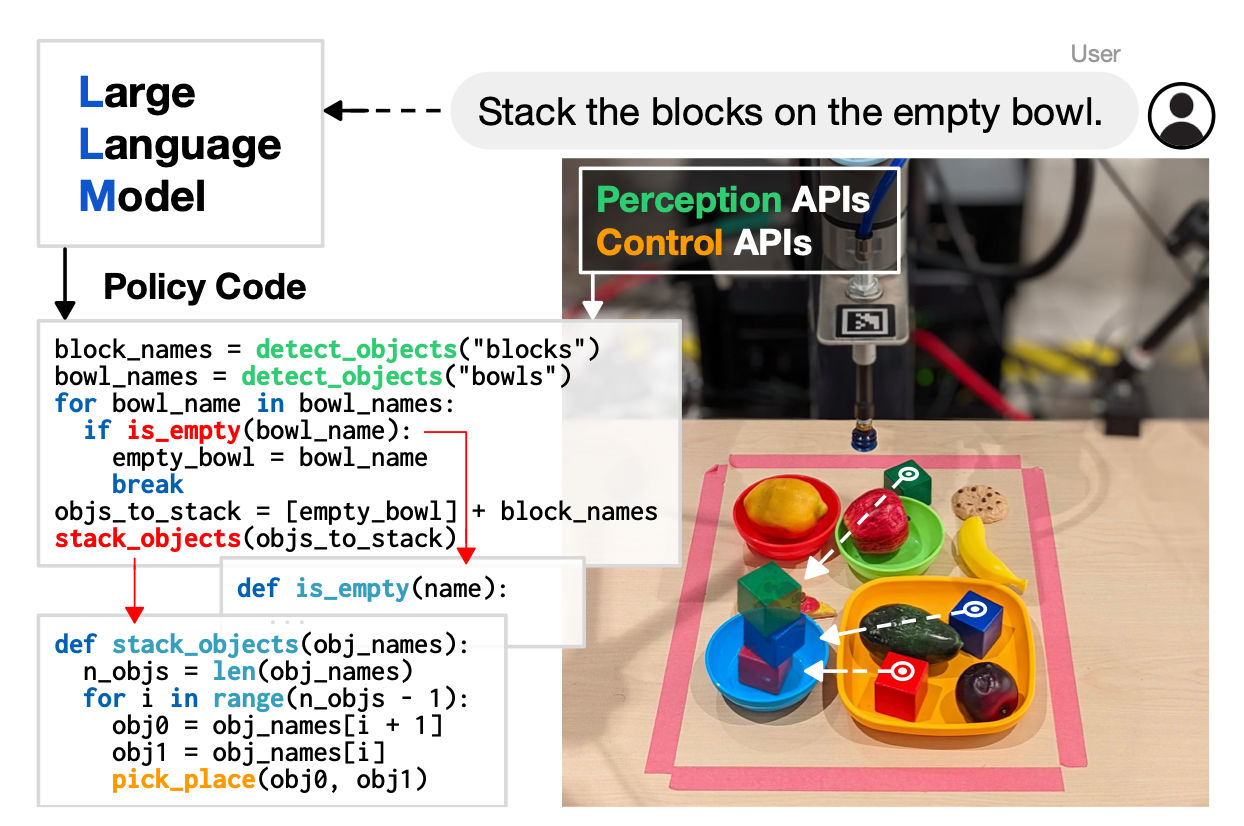

Code as Policies explores using code-writing LLMs to generate robot policy code from natural language commands [8]. This work considers robot navigation and manipulation tasks on a real-world robot platform from Everyday Robots. The study shows that LLMs can be repurposed to write policy code that expresses functions or feedback loops which process perception outputs and invoke control primitive APIs. The authors use few-shot prompting, in which example language commands, formatted as comments, are provided along with the corresponding policy code. The LLM can generate robot policies that exhibit spatial-geometric reasoning, generalize to new instructions, and assign precise values (e.g., velocities) to ambiguous descriptions such as “faster”. These policies can represent reactive policies, such as impedance controllers, as well as waypoint-based policies, such as vision-based pick and place or trajectory-based control. A crucial aspect of this approach is hierarchical code generation: when the generated code calls a function that is not yet defined, the LLM is prompted to define it, recursively.
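The recursive definition of undefined functions can be sketched with Python's `ast` module: find names that the code calls but that are neither defined nor provided by the API, ask the LLM for each definition, and recurse into those definitions. Assumptions: the LLM is replaced by a dictionary of hand-written definitions, the primitives `get_obj_pos` and `pick_place` and the helper names are hypothetical, and only calls to plain names are detected. This illustrates the idea rather than reproducing the Code as Policies implementation.

```python
import ast
import builtins
from typing import Callable, List, Set

BUILTIN_NAMES = set(dir(builtins))


def called_names(code: str) -> Set[str]:
    """Names of functions called directly by name, e.g. foo(...)."""
    return {
        node.func.id
        for node in ast.walk(ast.parse(code))
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
    }


def defined_names(code: str) -> Set[str]:
    """Names of functions defined with `def` in the code."""
    return {node.name for node in ast.walk(ast.parse(code)) if isinstance(node, ast.FunctionDef)}


def expand(code: str, known: Set[str], llm: Callable[[str], str], depth: int = 3) -> str:
    """Prepend LLM-written definitions for every function `code` calls but nobody defines.

    `known` holds primitive API names plus names already generated; it is
    updated in place so each helper is generated only once."""
    if depth == 0:
        return code
    missing = sorted(called_names(code) - defined_names(code) - known - BUILTIN_NAMES)
    pieces: List[str] = []
    for name in missing:
        if name in known:  # an earlier sibling's recursion already defined it
            continue
        known.add(name)
        # The new definition may itself call undefined helpers, so recurse into it.
        pieces.append(expand(llm(name), known, llm, depth - 1))
    return "\n\n".join(pieces + [code])


# Hand-written stand-ins for what an LLM might return for each missing function.
FAKE_LLM = {
    "stack_all": (
        "def stack_all(blocks):\n"
        "    for below, above in zip(blocks, blocks[1:]):\n"
        "        put_first_on_second(above, below)"
    ),
    "put_first_on_second": (
        "def put_first_on_second(obj, target):\n"
        "    pick_place(get_obj_pos(obj), get_obj_pos(target))"
    ),
}

log: List[str] = []
primitives = {
    "get_obj_pos": lambda name: name,  # placeholder: a real API would return a position
    "pick_place": lambda pick, place: log.append(f"{pick} -> {place}"),
}
top_level = 'stack_all(["red block", "blue block", "green block"])'
program = expand(top_level, set(primitives), FAKE_LLM.__getitem__)
exec(program, dict(primitives))
print(program)
print(log)
```

The top-level command only names `stack_all`; the expansion discovers that `stack_all` in turn needs `put_first_on_second`, and stops once everything bottoms out in the primitive API.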

Code as Policies uses LLMs to generate Python code that can then serve as robot policies for executing a given task [8].

In-context learning adapts an LLM to a task without any parameter optimization, relying instead on a set of examples included in the prompt. Chain-of-Thought (CoT) prompting is a prominent in-context learning technique [9]: the examples in the prompt include intermediate reasoning steps, which leads the model to produce its own sequence of intermediate steps before arriving at a final solution to complex, multi-step problems. This capability allows LLMs to be applied in decision-making scenarios such as offline trajectory optimization and in-context reinforcement learning.
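Chain-of-Thought prompting needs no training code, only a prompt format. The sketch below builds a few-shot CoT prompt for a toy robot question and parses the final answer from a completion. The exemplar, the "The answer is" convention, the helper names, and the completion are illustrative assumptions; the completion is hand-written and the actual LLM call is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    question: str
    reasoning: str  # the intermediate steps shown to the model
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Few-shot prompt whose exemplars spell out reasoning before the answer."""
    blocks = [f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}." for ex in exemplars]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Take the final answer from a completion that follows the exemplar format."""
    marker = "The answer is "
    idx = completion.rfind(marker)
    if idx == -1:
        return None
    return completion[idx + len(marker):].strip().rstrip(".")


exemplars = [
    Exemplar(
        question=("The robot is at the door, the cup is in the kitchen, and the user is "
                  "in the living room. How many navigation moves does it need to bring "
                  "the cup to the user?"),
        reasoning=("The robot first moves from the door to the kitchen and picks up the cup. "
                   "Then it moves from the kitchen to the living room. That is 2 moves."),
        answer="2",
    ),
]
prompt = build_cot_prompt(
    exemplars,
    "The robot is in the bedroom, the sponge is in the bathroom, and the spill is in "
    "the kitchen. How many navigation moves does it need to bring the sponge to the spill?",
)
print(prompt)

# Hand-written example of a completion in the exemplar's format (no model was called).
completion = ("The robot moves from the bedroom to the bathroom and grabs the sponge. "
              "Then it moves to the kitchen. That is 2 moves. The answer is 2.")
print(extract_answer(completion))
```

Only the prompt changes between tasks; the model's weights are untouched, which is what distinguishes this from fine-tuning approaches such as NL2TL.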

Conclusion

In this part of the series, we considered approaches for using LLMs for high-level planning in robotics, focusing primarily on three categories: language instructions for task specifications, code generation using LLMs for task planning, and in-context learning for decision-making. We also gave an example of how these ideas are being applied in the real world by highlighting the recent partnership between Figure AI and OpenAI.

References

- [1] Foundation Models in Robotics

- [2] Figure AI

- [3] Brett Adcock interview with No Priors

- [4] SayCan: Do As I Can, Not As I Say

- [5] NL2TL: Transforming Natural Languages to Temporal Logics using Large Language Models

- [6] AutoTAMP: Autoregressive Task and Motion Planning with LLMs as Translators and Checkers

- [7] ProgPrompt: Generating Situated Robot Task Plans using Large Language Models

- [8] Code as Policies: Language Model Programs for Embodied Control

- [9] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

Last updated at: April 7, 2024